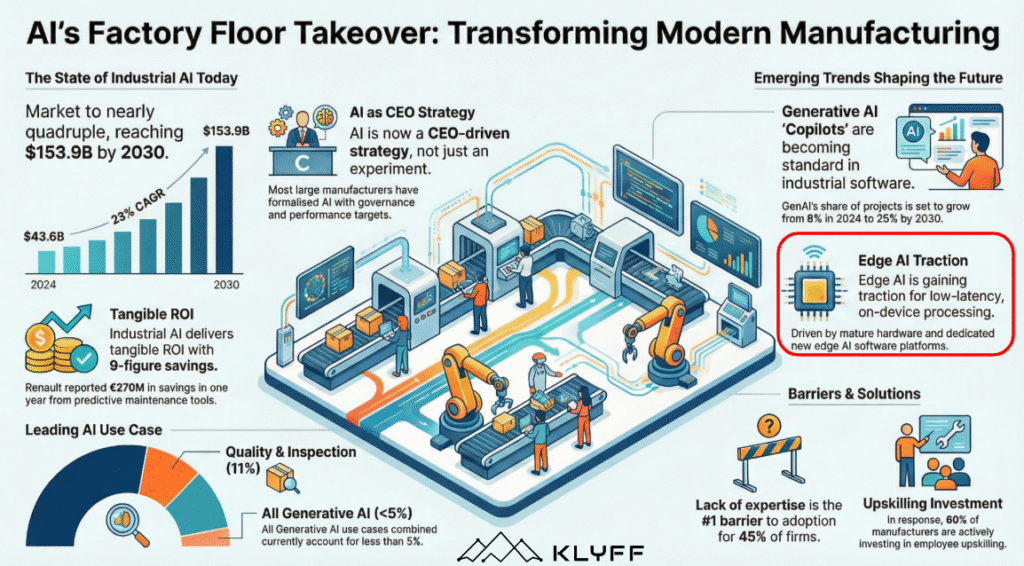

The manufacturing sector is undergoing its most significant shift since the introduction of the assembly line. As Industry 4.0 matures, the focus is transitioning from mere connectivity to “Edge Intelligence.” While cloud computing provided the initial storage and processing power for big data, the demand for real-time decision-making, reduced latency, and enhanced security has pushed AI models out of the data center and directly onto the factory floor. According to recent insights from IoT Analytics, Industrial AI is no longer a pilot project; it is a foundational component of the “Software-Defined Factory.”

Introduction: The Convergence of OT and AI

For decades, Operational Technology (OT) and Information Technology (IT) lived in silos. OT focused on programmable logic controllers (PLCs) and deterministic systems, while IT managed data and analytics. Edge AI is the bridge between these worlds.

Edge AI refers to the deployment of machine learning models directly on local hardware—such as gateways, cameras, or the machines themselves—rather than relying on a centralized cloud server. In manufacturing, where a millisecond of delay can result in a damaged tool or a safety hazard, the “Edge” is where the value is realized.

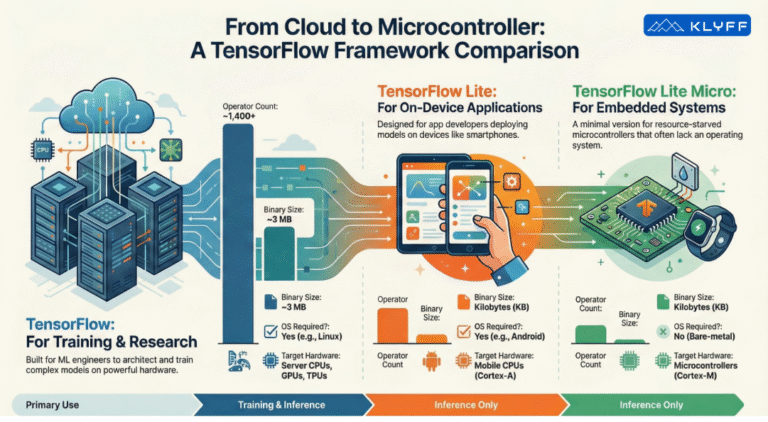

The Shift from Cloud to Edge

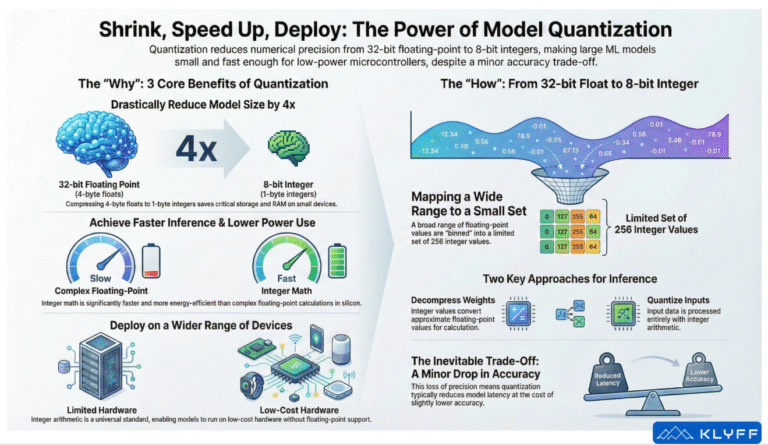

Industrial AI is moving toward the edge. While the cloud is excellent for training massive models on historical data, the execution (inference) is increasingly happening locally. This shift is driven by three factors:

- Latency: Real-time control loops require response times under 10ms.

- Bandwidth: Streaming high-definition video from 500 factory cameras to the cloud is cost-prohibitive.

- Privacy: Keeping proprietary process data within the factory walls mitigates cybersecurity risks.

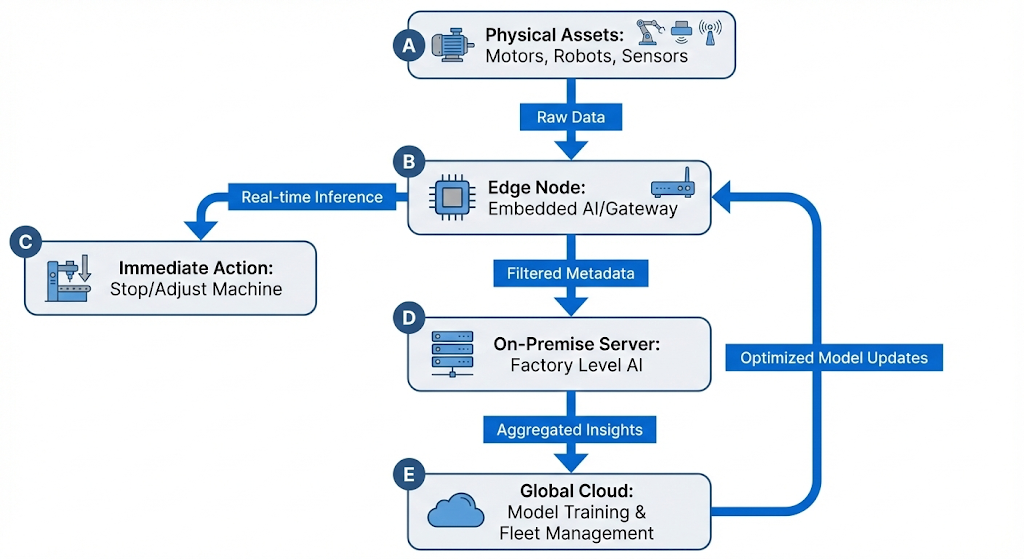

The Architectural Framework of Edge AI

To understand Edge AI, one must understand the data flow. Unlike traditional systems, where data moves in a linear path to the cloud, Edge AI creates a multi-tiered intelligence structure.

1. The Physical Layer (Data Origin)

The process begins at the bottom with Physical Assets like motors, robotic arms, and sensors. These devices generate massive amounts of “Raw Data”—high-frequency vibrations, heat readings, or high-definition video frames.

2. The Edge Node (Real-Time Intelligence)

The raw data is sent immediately to an Edge Node (an Industrial PC or AI Gateway). This is the most critical stage for operational speed.

- Real-time Inference: The AI model running on the gateway analyzes the data instantly.

- Immediate Action: If the AI detects a problem (like a tool about to break), it sends a command directly back to the machine to stop or adjust. This happens in milliseconds, bypassing the lag of the internet.

3. The On-Premise Server (Site-Level Insights)

Not all data needs to be acted upon instantly. The Edge Node strips away the “noise” and sends Filtered Metadata (summaries of what happened) to a factory-level server.

- This server looks at the “big picture” of the entire production line, identifying trends that a single machine might miss.

4. The Global Cloud (The Brain)

The On-Premise server sends Aggregated Insights up to the Global Cloud. The cloud is too slow for real-time machine control, but it has near-infinite storage and processing power.

- Model Training: Data scientists use the accumulated data from all machines across all factories to retrain and improve the AI models.

- Fleet Management: It allows headquarters to see the health of every factory globally in one dashboard.

5. The Feedback Loop (The “Brain” Updates the “Muscle”)

The most important part of this flow is the final arrow: Optimized Model Updates. Once the cloud has “learned” how to be more accurate based on new data, the improved AI model is pushed back down to the Edge Nodes. This ensures that the machines on the factory floor are always getting smarter without needing hardware changes. This is where the Klyff platform shines.
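On the edge side, one way to picture this feedback loop is a node that accepts a pushed model only when it is newer than the one it is running and its checksum verifies. The sketch below is hypothetical. `EdgeModelSlot`, the JSON manifest with `version` and `sha256` fields, and the byte-string "model" are illustrative assumptions. It is not the Klyff platform's API or any specific product's update protocol.

```python
import hashlib
import json


class EdgeModelSlot:
    """Holds the model an edge node currently uses for inference (hypothetical design)."""

    def __init__(self, version, weights):
        self.version = version
        self.weights = weights

    def apply_update(self, manifest_json, blob):
        """Swap in a cloud-pushed model only if it is newer and its checksum verifies."""
        manifest = json.loads(manifest_json)
        if manifest["version"] <= self.version:
            return False  # stale or duplicate push: keep the current model
        if hashlib.sha256(blob).hexdigest() != manifest["sha256"]:
            return False  # corrupted or tampered download: keep the current model
        # Replace the model only after verification, so inference never uses a bad blob
        self.weights = blob
        self.version = manifest["version"]
        return True


slot = EdgeModelSlot(version=3, weights=b"model-v3")
new_blob = b"model-v4"
manifest = json.dumps({"version": 4, "sha256": hashlib.sha256(new_blob).hexdigest()})
bad_manifest = json.dumps({"version": 5, "sha256": "0" * 64})

results = [
    slot.apply_update(manifest, new_blob),        # newer and verified: accepted
    slot.apply_update(manifest, new_blob),        # same version again: ignored
    slot.apply_update(bad_manifest, b"model-v5"), # checksum mismatch: rejected
]
print(results)  # [True, False, False]
```

Rejecting stale or unverified pushes means the node keeps running its last known-good model whenever an update fails.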

Let us see this with example code. In this scenario, an industrial motor is generating high-frequency vibration data. Instead of sending every single reading to the cloud (which would be thousands of data points per second), the Edge device keeps a rolling window of recent readings, computes their mean and standard deviation, and transmits data only if a new reading deviates sharply from that norm (an anomaly).

```python
import random
import time
from collections import deque

import numpy as np

# Configuration
THRESHOLD_Z_SCORE = 3.0    # Sensitivity for anomaly detection
WINDOW_SIZE = 20           # Number of readings to keep in local memory
NUM_READINGS = 50          # Length of the simulation run
CLOUD_SIMULATION_LOG = []  # Stand-in for the messages actually sent to the cloud


class EdgeDevice:
    def __init__(self):
        # A deque with maxlen is a true circular buffer: once the window is
        # full, the oldest reading is dropped automatically.
        self.buffer = deque(maxlen=WINDOW_SIZE)
        print("Edge Node Initialized. Monitoring Motor Vibration...")

    def detect_anomaly(self, value):
        """Simple statistical anomaly detection (Z-score against the recent window)."""
        if len(self.buffer) < WINDOW_SIZE:
            return False  # Not enough history yet to form a baseline
        window = np.asarray(self.buffer)
        mean = window.mean()
        std_dev = window.std()
        if std_dev == 0:
            return False
        z_score = abs(value - mean) / std_dev
        return z_score > THRESHOLD_Z_SCORE

    def process_reading(self, raw_value):
        # 1. Perform local inference against the *previous* window, so a new
        #    outlier cannot inflate its own baseline and mask itself
        is_anomaly = self.detect_anomaly(raw_value)

        # 2. Store the reading in the local circular buffer
        self.buffer.append(raw_value)

        # 3. Decision logic: cloud vs. local. Normal data stays on the edge
        #    to save bandwidth; only anomalies are transmitted.
        if is_anomaly:
            self.send_to_cloud(raw_value, "CRITICAL: Anomaly Detected")

    def send_to_cloud(self, value, message):
        timestamp = time.strftime("%H:%M:%S")
        payload = {"timestamp": timestamp, "value": round(value, 2), "status": message}
        CLOUD_SIMULATION_LOG.append(payload)
        print(f"  [CLOUD SYNC] --> {payload}")


# --- Simulation Run ---
edge_node = EdgeDevice()

for i in range(NUM_READINGS):
    # Simulate normal vibration (values around 10.0)
    vibration = random.gauss(10.0, 0.5)

    # Manually inject an anomaly at step 25
    if i == 25:
        vibration = 25.0

    edge_node.process_reading(vibration)
    time.sleep(0.05)

print(f"\nSimulation Complete. Total readings: {NUM_READINGS} | "
      f"Cloud transmissions: {len(CLOUD_SIMULATION_LOG)}")
```

How this script maps to the Architecture

- High-Frequency Input: The `for` loop simulates the Physical Asset generating data.

- Local Buffer: `self.buffer` represents the Edge Node’s memory. It keeps only what is necessary for the immediate context.

- The “Inference”: The `detect_anomaly` function is a simple statistical stand-in for a machine learning model. On a real factory floor, this might be a complex neural network.

- Bandwidth Efficiency: Even though 50 readings were generated, the “Cloud” typically receives only one alert, for the spike injected at step 25. This is the Filtered Metadata we discussed earlier.

Why this matters for ROI

If you have 1,000 sensors each sending 1 KB of data per second, you are looking at roughly 2.6 TB of data per 30-day month. By using Edge filtering to send only anomalies, you can cut cloud storage and egress costs by over 99% when anomalies are rare, while still forwarding every event the detector flags.
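Here is a quick check of this arithmetic. It assumes 1 KB = 1,000 bytes, a 30-day month, and forwarded messages roughly the same size as raw readings. The 1-in-1,000 anomaly rate is a hypothetical figure chosen for illustration.

```python
SECONDS_PER_MONTH = 30 * 24 * 60 * 60  # assumes a 30-day month


def monthly_volume_tb(num_sensors, bytes_per_second):
    """Raw upload volume per 30-day month, in decimal terabytes (10**12 bytes)."""
    return num_sensors * bytes_per_second * SECONDS_PER_MONTH / 10**12


def traffic_reduction_pct(fraction_forwarded):
    """Share of raw traffic avoided when only a fraction of readings leaves the edge."""
    return (1 - fraction_forwarded) * 100


raw_tb = monthly_volume_tb(num_sensors=1_000, bytes_per_second=1_000)  # 1 KB taken as 1,000 bytes
anomaly_rate = 1 / 1_000  # hypothetical: 1 reading in 1,000 is forwarded

print(f"Raw volume:      {raw_tb:.2f} TB/month")
print(f"Forwarded:       {raw_tb * anomaly_rate * 1_000:.2f} GB/month")
print(f"Traffic avoided: {traffic_reduction_pct(anomaly_rate):.1f}%")
```

The "over 99%" figure holds only while forwarded anomalies (plus any periodic summaries) stay below about 1% of all readings.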

Comparison of Industrial AI Layers

| Layer | Typical Latency | Bandwidth Demand | Primary Function | Hardware Examples |
| --- | --- | --- | --- | --- |
| Physical Assets | < 1 ms | Extremely High (Raw) | Data generation and mechanical execution | Sensors, PLCs, Motors |
| Edge Node | 1–10 ms | High (Local) | Real-time inference, safety shut-offs, and data thinning | NVIDIA Jetson, AI Gateways |
| On-Premise Server | 100 ms – 1 s | Medium (Metadata) | Plant-wide optimization and historical logging | Industrial PCs, Local Data Centers |
| Global Cloud | > 1 s (up to minutes) | Low (Aggregated) | Heavy model training, fleet-wide analytics, and ERP integration | AWS, Azure, Google Cloud |

Hardware Components

The proliferation of Edge AI is supported by a new generation of silicon:

- Microcontrollers (MCUs): For simple vibration or temperature analysis (TinyML).

- AI Accelerators (NPUs/TPUs): Specialized chips for deep learning inference.

- Industrial PCs (IPCs) with GPUs: Powerful edge nodes capable of running multiple high-speed computer vision streams.

Core Use Cases: Transforming the Factory Floor

Predictive Maintenance 4.0

Traditionally, maintenance was either reactive (fix it when it breaks) or preventative (fix it on a schedule). Edge AI enables Predictive Maintenance (PdM). By analyzing high-frequency vibration and acoustic data at the source, AI can detect “micro-anomalies” that may appear weeks before a failure.

- How it works: An edge device monitors a CNC spindle. It uses a Fast Fourier Transform (FFT) to convert sound waves into frequency data. A local model identifies a specific harmonic pattern indicative of bearing wear and alerts the operator immediately.
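The FFT step can be sketched in a few lines of NumPy. This is a minimal illustration on synthetic data. The 20 kHz sample rate, the 1,250 Hz "bearing defect" frequency, the ±10 Hz band, and the 5× alert ratio are all hypothetical. A real defect frequency depends on the bearing geometry and shaft speed. In place of a trained model, this uses a simple band-energy rule.

```python
import numpy as np

SAMPLE_RATE_HZ = 20_000     # assumed 20 kHz sampling
BEARING_FAULT_HZ = 1_250.0  # hypothetical defect frequency for this bearing
BAND_HZ = 10.0              # tolerance band around the defect frequency
ALERT_RATIO = 5.0           # hypothetical alert threshold


def band_energy_ratio(signal, sample_rate, center_hz, band_hz):
    """Mean spectral energy in the fault band, relative to the median energy of all bins."""
    window = np.hanning(len(signal))  # taper the edges to reduce spectral leakage
    spectrum = np.abs(np.fft.rfft(signal * window)) ** 2
    freqs = np.fft.rfftfreq(len(signal), d=1.0 / sample_rate)
    in_band = (freqs >= center_hz - band_hz) & (freqs <= center_hz + band_hz)
    return float(spectrum[in_band].mean() / np.median(spectrum))


def simulate_spindle(seconds, fault_amplitude, rng):
    """Synthetic vibration: 50 Hz shaft rotation + optional bearing tone + broadband noise."""
    t = np.arange(int(seconds * SAMPLE_RATE_HZ)) / SAMPLE_RATE_HZ
    signal = np.sin(2 * np.pi * 50.0 * t)
    signal += fault_amplitude * np.sin(2 * np.pi * BEARING_FAULT_HZ * t)
    signal += 0.2 * rng.standard_normal(t.size)
    return signal


rng = np.random.default_rng(0)
healthy = simulate_spindle(1.0, 0.0, rng)
worn = simulate_spindle(1.0, 0.3, rng)

for name, sig in (("healthy", healthy), ("worn", worn)):
    ratio = band_energy_ratio(sig, SAMPLE_RATE_HZ, BEARING_FAULT_HZ, BAND_HZ)
    verdict = "ALERT: bearing wear" if ratio > ALERT_RATIO else "ok"
    print(f"{name}: band ratio {ratio:.1f} -> {verdict}")
```

Normalizing by the median bin makes the rule insensitive to overall gain changes, such as a sensor remounted with slightly different coupling.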

Automated Optical Inspection (AOI)

Computer Vision is perhaps the most visible application of Edge AI. High-speed cameras on assembly lines capture images of every product.

- The Edge Advantage: In a bottling plant moving at 1,000 units per minute, the AI must decide if a cap is crooked in less than 50ms. Edge AI processes this locally, triggering a “rejection arm” without needing a round-trip to a cloud server.
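This time budget can be checked directly. At 1,000 units per minute, a new bottle arrives every 60 ms, so a 50 ms decision deadline leaves some headroom. The edge and cloud latencies below are hypothetical round numbers chosen for illustration, not measurements.

```python
def interval_ms(units_per_minute):
    """Time between consecutive products passing the camera, in milliseconds."""
    return 60_000 / units_per_minute


def meets_deadline(latency_ms, deadline_ms):
    return latency_ms <= deadline_ms


INTERVAL_MS = interval_ms(1_000)  # 60.0 ms between bottles at 1,000 units per minute
DEADLINE_MS = 50.0                # decision deadline from the text, inside that 60 ms window

# Hypothetical end-to-end latencies (capture + inference + actuator signal), illustration only
for path, latency in (("edge inference", 15.0), ("cloud round-trip", 150.0)):
    verdict = "meets deadline" if meets_deadline(latency, DEADLINE_MS) else "misses deadline"
    print(f"{path}: {latency:.0f} ms -> {verdict}")
```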

Autonomous Mobile Robots (AMRs)

Unlike older Automated Guided Vehicles (AGVs) that followed magnetic strips, AMRs use Edge AI for SLAM (Simultaneous Localization and Mapping). They process LIDAR and camera data in real-time to navigate around human workers and dynamic obstacles.

Energy Management and Sustainability

As energy prices fluctuate, Edge AI optimizes power consumption. AI models at the edge can predict peak load times and automatically throttle non-critical systems or shift loads to optimize against “Time-of-Use” electricity pricing.
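The load-shifting half of this idea can be sketched as a small search: given an hourly Time-of-Use tariff, pick the cheapest start time for a deferrable job. The tariff, the 50 kW chiller job, and its time window are hypothetical. This version uses a known tariff rather than a load forecast, so it shows only the scheduling step.

```python
# Hypothetical Time-of-Use tariff: price per kWh for each hour of the day (index = hour)
TOU_PRICE = [0.10] * 7 + [0.18] * 4 + [0.30] * 6 + [0.18] * 4 + [0.10] * 3  # 24 entries


def cheapest_start(prices, duration_h, power_kw, earliest, latest_end):
    """Return (start_hour, cost) minimizing the cost of a load that must run
    `duration_h` consecutive hours, starting no earlier than `earliest`
    and finishing by `latest_end`."""
    best_start, best_cost = None, float("inf")
    for start in range(earliest, latest_end - duration_h + 1):
        cost = power_kw * sum(prices[start:start + duration_h])
        if cost < best_cost:
            best_start, best_cost = start, cost
    return best_start, best_cost


# Hypothetical job: 3 hours of 50 kW pre-cooling, available from noon, done by midnight
start, cost = cheapest_start(TOU_PRICE, duration_h=3, power_kw=50, earliest=12, latest_end=24)
naive_cost = 50 * sum(TOU_PRICE[12:15])  # running immediately at noon
print(f"Run at {start}:00 for {cost:.2f} instead of {naive_cost:.2f} at noon")
```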

The Role of Generative AI at the Edge

As noted by IoT Analytics, Generative AI is the newest frontier. While training a Large Language Model (LLM) requires a massive GPU cluster, “Small Language Models” (SLMs) are now being deployed at the edge.

Use Case: The AI Maintenance Assistant

An operator wearing an AR headset can point a camera at a malfunctioning machine. An Edge-based Vision-Language Model (VLM) identifies the component, retrieves the digital manual, and overlays step-by-step repair instructions—all without an internet connection.

Flow Analysis: Data Processing at the Edge

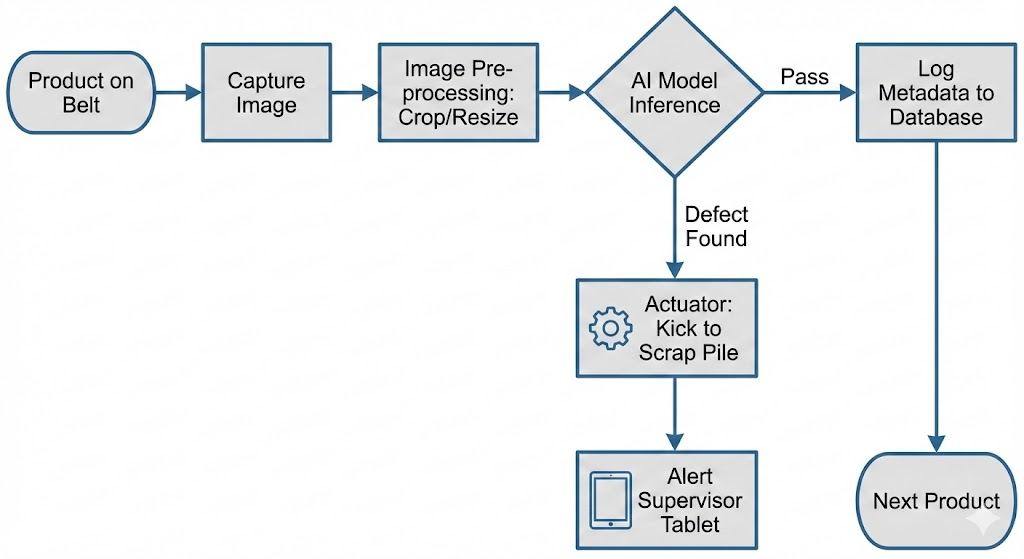

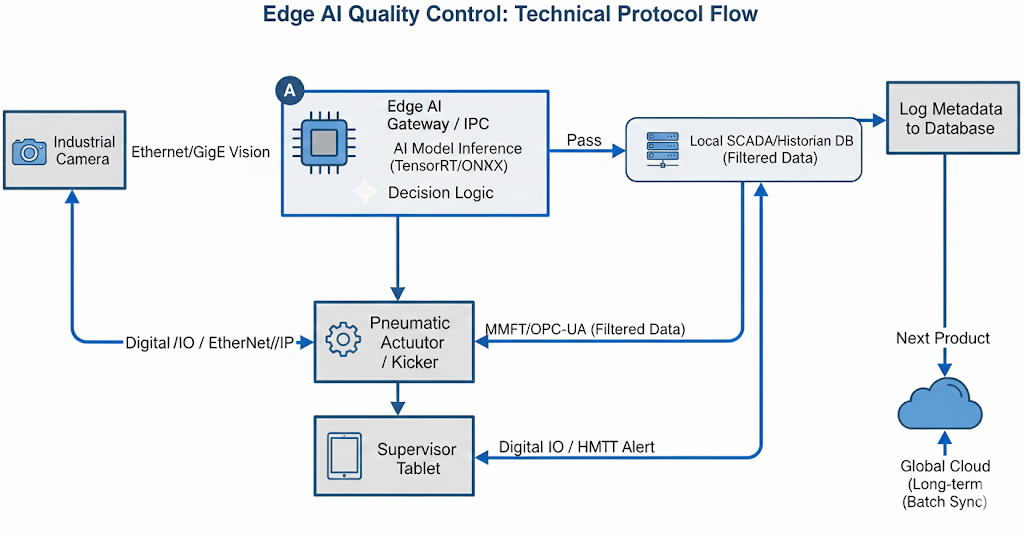

The following flow illustrates how a “closed-loop” Edge AI system operates in a quality control environment.

Flowchart: Edge AI Quality Loop

This flowchart illustrates a real-time Automated Optical Inspection (AOI) system powered by Edge AI. It details the decision-making loop that occurs on a high-speed manufacturing line to ensure quality control without human intervention.

Step-by-Step Process Breakdown

1. Input and Data Acquisition

- Product on Belt: The sequence begins when a physical item moves along a conveyor belt into the inspection zone.

- Capture Image: A high-speed industrial camera takes a photo of the product. At the “Edge,” this happens in milliseconds to keep up with the line speed.

2. Local Processing

- Image Pre-processing (Crop/Resize): Before the AI can “look” at the image, the raw data is cleaned up. This involves removing background noise, cropping to the area of interest, and resizing the image to the input dimensions the AI model expects, so it can be processed efficiently.

3. The “Brain” (Inference)

- AI Model Inference: This is the core of Edge AI. A pre-trained machine learning model analyzes the image to look for specific patterns—such as scratches, missing components, or incorrect dimensions.

4. The Decision Paths

The diamond-shaped decision node creates two distinct outcomes based on the AI’s analysis:

- Path A: Pass (No Defects)
  - Log Metadata to Database: Information about the successful inspection is saved. This is the “filtered metadata” discussed in the architecture, used for long-term reporting.
  - Next Product: The system resets to wait for the next item.

- Path B: Defect Found
  - Actuator (Kick to Scrap Pile): The system triggers a physical mechanical action. A pneumatic arm or “kicker” immediately removes the faulty item from the production line.
  - Alert Supervisor Tablet: Simultaneously, a digital notification is sent to a human supervisor so they can investigate if a machine is consistently producing errors.
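The loop described in these steps can be sketched in a few lines. This is a hypothetical illustration. The region of interest, the 64x64 model input, and `infer_defect` are assumptions; `infer_defect` is a dark-pixel heuristic standing in for a trained vision model. The `events` list stands in for the actuator, the supervisor tablet, and the database.

```python
import numpy as np

MODEL_INPUT = (64, 64)                     # hypothetical model input size
ROI = (slice(100, 356), slice(200, 456))   # hypothetical region of interest in the frame


def preprocess(frame):
    """Crop to the region of interest, downsample to the model input size, scale to [0, 1]."""
    crop = frame[ROI]
    rows = np.linspace(0, crop.shape[0] - 1, MODEL_INPUT[0]).astype(int)
    cols = np.linspace(0, crop.shape[1] - 1, MODEL_INPUT[1]).astype(int)
    resized = crop[np.ix_(rows, cols)]     # nearest-neighbour resize
    return resized.astype(np.float32) / 255.0


def infer_defect(image):
    """Placeholder for model inference: flag images with more than 5% dark pixels."""
    return float(np.mean(image < 0.2)) > 0.05


def inspect(frame, events):
    """One pass of the quality loop, with every decision made locally."""
    image = preprocess(frame)
    if infer_defect(image):
        events.append("actuator: kick to scrap")   # Path B
        events.append("alert: supervisor tablet")
        return "defect"
    events.append("db: log pass metadata")         # Path A
    return "pass"


events = []
good = np.full((480, 640), 200, dtype=np.uint8)   # synthetic grayscale frame
scratched = good.copy()
scratched[150:250, 250:350] = 10                  # simulated dark defect inside the ROI

results = [inspect(frame, events) for frame in (good, scratched)]
print(results)  # ['pass', 'defect']
print(events)
```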

Why this represents “Edge AI”

This flow is a perfect example of Edge AI because the Inference and the Actuator command happen locally. If the system had to send that image to a far-away cloud server to ask, “Is this product okay?”, the product would have already moved 10 feet down the belt before the answer came back. By keeping the “brain” next to the “eyes” (the camera), the factory can make split-second rejections.

Implementation Challenges: The “Brownfield” Problem

While a “Greenfield” (new) factory can be built with Edge AI in mind, 90% of manufacturing happens in “Brownfield” environments, where much of the equipment is 20–30 years old.

Challenge 1: Interoperability

Old machines use legacy protocols like Modbus or PROFIBUS. Edge AI gateways must act as translators, converting data from these protocols into modern protocols such as MQTT or OPC-UA before processing.
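Modbus exposes data as 16-bit registers, so a gateway often has to reassemble values before republishing them. The sketch below is minimal and makes several assumptions. It assumes a 32-bit float stored in two holding registers with big-endian byte and word order, although devices vary. The `factory/line1/...` topic scheme and the machine and tag names are hypothetical. The sketch only builds the topic and JSON payload. The actual Modbus read and MQTT publish would be handled by whatever client libraries the gateway uses.

```python
import json
import struct
import time


def registers_to_float32(high_word, low_word):
    """Combine two 16-bit Modbus registers into an IEEE-754 float32.
    Assumes big-endian byte and word order; check the device manual."""
    raw = struct.pack(">HH", high_word, low_word)
    return struct.unpack(">f", raw)[0]


def to_mqtt_message(machine_id, tag, registers):
    """Translate a legacy register read into a (topic, JSON payload) pair."""
    value = registers_to_float32(*registers)
    topic = f"factory/line1/{machine_id}/{tag}"  # hypothetical topic scheme
    payload = json.dumps({"value": round(value, 3), "ts": int(time.time())})
    return topic, payload


# 0x4120 0x0000 is the big-endian float32 encoding of 10.0
topic, payload = to_mqtt_message("press-07", "spindle_temp_c", (0x4120, 0x0000))
print(topic)    # factory/line1/press-07/spindle_temp_c
print(payload)
```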

Challenge 2: Data Quality

AI is only as good as its training data. In a factory, sensors often get dirty or misaligned. “Data Drift” occurs when the physical environment changes (e.g., seasonal temperature changes affecting machine tolerances), requiring the models to be retrained or “fine-tuned” frequently.
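A basic way to notice this kind of drift is to compare recent sensor data against the data the model was trained on. The sketch below uses a simple mean-shift check, measured in baseline standard deviations, as one of many possible drift tests. The 0.5-standard-deviation threshold and the synthetic "summer" temperature shift are hypothetical.

```python
import numpy as np

DRIFT_THRESHOLD = 0.5  # hypothetical: flag a mean shift larger than half a baseline std dev


def mean_shift(baseline, recent):
    """Shift of the recent mean from the training-time mean, in baseline standard deviations."""
    return float(abs(np.mean(recent) - np.mean(baseline)) / np.std(baseline))


rng = np.random.default_rng(1)
baseline = rng.normal(40.0, 2.0, size=5_000)    # e.g. spindle temperature when the model was trained
same_season = rng.normal(40.0, 2.0, size=500)
summer = rng.normal(42.0, 2.0, size=500)        # same machine after a seasonal +2 degree shift

for name, recent in (("same season", same_season), ("summer", summer)):
    shift = mean_shift(baseline, recent)
    action = "retrain / fine-tune" if shift > DRIFT_THRESHOLD else "ok"
    print(f"{name}: shift {shift:.2f} std -> {action}")
```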

Strategic ROI: Why Manufacturers are Investing

Measurable returns drive the investment in Edge AI:

- OEE (Overall Equipment Effectiveness) Improvement: By reducing unplanned downtime and increasing quality yields, Edge AI can boost OEE by 10–15%.

- Labor Efficiency: AI handles the “monotonous” task of visual inspection, allowing human workers to focus on complex problem-solving.

- Reduced Scrapping: Detecting a process drift early means fewer defective parts are produced, directly impacting the bottom line.
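OEE is conventionally computed as Availability × Performance × Quality. The sketch below uses made-up shift numbers: 480 planned minutes, a 0.5-minute ideal cycle time, and hypothetical before/after downtime and scrap counts. It shows how less downtime and fewer defects feed into the score.

```python
def oee(planned_min, downtime_min, ideal_cycle_min, total_count, good_count):
    """OEE = Availability x Performance x Quality."""
    run_time = planned_min - downtime_min
    availability = run_time / planned_min
    performance = (ideal_cycle_min * total_count) / run_time
    quality = good_count / total_count
    return availability * performance * quality


# Hypothetical shift before and after an Edge AI rollout
before = oee(480, downtime_min=60, ideal_cycle_min=0.5, total_count=700, good_count=665)
after = oee(480, downtime_min=40, ideal_cycle_min=0.5, total_count=760, good_count=737)
print(f"OEE before: {before:.1%}, after: {after:.1%}")
```

The product simplifies to good parts × ideal cycle time ÷ planned time, which is why cutting downtime and cutting scrap both show up directly. A "10–15%" gain can mean either percentage points or a relative improvement. In this made-up shift, OEE rises from about 69.3% to 76.8%, which is 7.5 points or roughly 11% relative.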

The Future: Software-Defined Manufacturing

We are moving toward a future where the hardware of a machine is secondary to the software that runs it. In this “Software-Defined” era:

- Digital Twins will be powered by real-time Edge AI data, creating a living simulation of the entire factory.

- 5G Private Networks will provide the ultra-reliable, low-latency backbone for thousands of edge devices to communicate peer-to-peer.

- Federated Learning will allow multiple factories to “learn” from each other’s data without actually sharing the raw data, preserving corporate secrets while improving AI accuracy.
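One common way to implement this is federated averaging (FedAvg). Each site trains on its own data and shares only model weights. A coordinator then averages those weights in proportion to each site's sample count. The sketch below is a toy illustration, not a production federated-learning stack. It uses a linear model and synthetic data for three hypothetical plants.

```python
import numpy as np


def local_update(weights, X, y, lr=0.1, epochs=20):
    """A few epochs of gradient descent on one plant's private data (linear model, MSE loss)."""
    w = weights.copy()
    for _ in range(epochs):
        grad = 2 * X.T @ (X @ w - y) / len(y)
        w -= lr * grad
    return w


def fed_avg(global_w, plants, rounds=10):
    """Each round, every plant trains locally; only the weights are averaged."""
    for _ in range(rounds):
        updates = [local_update(global_w, X, y) for X, y in plants]
        sizes = np.array([len(y) for _, y in plants], dtype=float)
        global_w = np.average(updates, axis=0, weights=sizes)
    return global_w


rng = np.random.default_rng(0)
true_w = np.array([2.0, -1.0])
plants = []
for n in (200, 500, 300):  # three plants with different amounts of (private) data
    X = rng.normal(size=(n, 2))
    y = X @ true_w + 0.1 * rng.normal(size=n)
    plants.append((X, y))

w = fed_avg(np.zeros(2), plants)
print(np.round(w, 2))
```

The raw `X` and `y` arrays never leave `local_update`. Only the two-element weight vectors are combined.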

Conclusion

Edge AI is the “brain” of the modern factory. By moving intelligence to the point of action, manufacturers can achieve levels of agility and precision that were previously impossible. As highlighted by IoT Analytics, the leaders in this space are those who move beyond the “Pilot Purgatory” and successfully integrate AI into their core operational workflows.

The transformation is clear: The factory of the future will not be judged by its steel and motors, but by the intelligence of its edge.

Key Takeaways for Manufacturing Leaders

- Determinism at the Edge: For tasks like motion control or high-speed sorting, the Edge Node is non-negotiable. The “jitter” (variability in timing) of a cloud connection could cause a robot to miss a part or collide with an object.

- The “Data Tax”: Sending raw high-frequency vibration data (e.g., 20kHz sampling) to the cloud is expensive. Edge nodes reduce this cost by 90% or more by only sending the “features” or anomalies detected.

- Closed-Loop Learning: The system is circular. The Cloud provides the Strategy (the trained model), while the Edge provides the Tactics (the real-time execution).
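To make "send features, not samples" concrete, here is a minimal sketch that reduces one second of 20 kHz vibration data to four common condition-monitoring features: RMS, peak, crest factor, and kurtosis. The feature set, the float32 encoding, and the synthetic Gaussian signal are assumptions for illustration.

```python
import numpy as np

SAMPLE_RATE_HZ = 20_000  # 20 kHz, as in the example above


def extract_features(window):
    """Summarize one window of raw vibration samples into a few scalar features."""
    rms = float(np.sqrt(np.mean(window ** 2)))
    peak = float(np.max(np.abs(window)))
    centered = window - window.mean()
    kurtosis = float(np.mean(centered ** 4) / np.mean(centered ** 2) ** 2)
    return {"rms": rms, "peak": peak, "crest_factor": peak / rms, "kurtosis": kurtosis}


rng = np.random.default_rng(0)
raw = rng.normal(0.0, 1.0, size=SAMPLE_RATE_HZ).astype(np.float32)  # one second of samples

features = extract_features(raw)
raw_bytes = raw.nbytes              # 20,000 float32 samples (16-bit samples would be 40 kB)
feature_bytes = 4 * len(features)   # assumes each feature is sent as a 4-byte float

print(features)
print(f"{raw_bytes} bytes raw -> {feature_bytes} bytes of features "
      f"({100 * (1 - feature_bytes / raw_bytes):.3f}% less)")
```

For Gaussian noise the kurtosis is close to 3. Impulsive faults such as spalled bearings tend to push kurtosis and crest factor upward, which is why these features are popular for thinning vibration data.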

Reference Summary

- IoT Analytics: Insights on Industrial AI market trends and the shift toward software-defined manufacturing.

- NVIDIA/Intel Whitepapers: Hardware benchmarks for edge inference.

- Industry 4.0 Standards: OPC-UA and MQTT integration protocols.

- Case Studies: Predictive maintenance implementations in automotive and semiconductor industries.