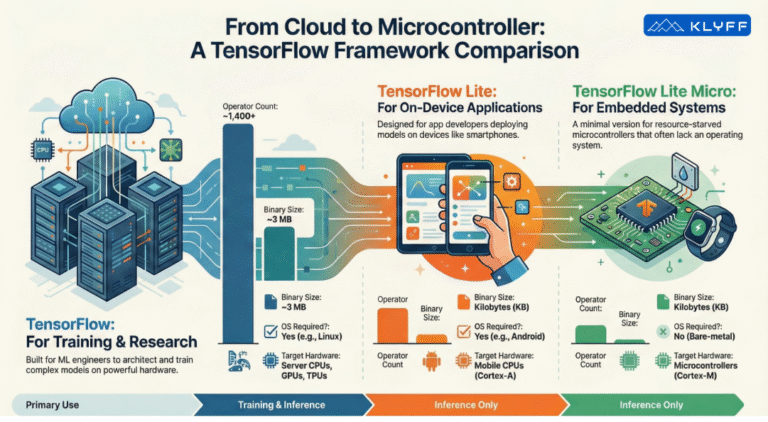

As a developer or engineer stepping into machine learning, you rely on powerful frameworks. But when you move to TinyML (deploying models onto mobile devices or microcontrollers), every kilobyte and every operation counts. That efficiency mandate means optimizing not just the model but also the runtime that executes it at inference time.

This is why Google’s ecosystem splits into three distinct tools: TensorFlow (TF), optimized for training; TensorFlow Lite (TFLite), optimized for application deployment on mobile; and TensorFlow Lite for Microcontrollers (TFLite Micro), custom-designed for the deepest end of embedded systems.

The distinctions arise from their core purpose: TF is designed for researchers architecting models, while TFLite and TFLite Micro are designed for application developers focused purely on inference. TFLite Micro is specifically engineered for embedded systems that often lack a supportive operating system and have extremely limited memory.

Here is a detailed breakdown of the technical differences across the Model, Software, and Hardware categories.

Technical Distinctions in the TensorFlow Ecosystem

1. Model Structure and Operation

The modeling differences reflect the separation between the flexible, experimental needs of training versus the fixed, efficient needs of deployment.

Feature | TensorFlow (TF) | TensorFlow Lite (TFLite) | TensorFlow Lite Micro (TFLite Micro) |

|---|---|---|---|

| Primary Focus | Training and model architecture. Optimized for the training side. | Extremely optimized for inference and application deployment. | Extremely optimized for inference. |

| Weight Updates | Weights are constantly changing via back propagation and gradient descent. | No weight updates are performed during deployment. | No weight updates are performed during deployment. |

| Network Structure | Capability exists to change the network structure (e.g., trying new operations or using dropout). | Network structure is fixed, as the model has already been designed and deployed. | Network structure is fixed. |

| Operator (Op) Support | Supports over 1,400 ops to enable exploration during training. | Supports a handful of ops (almost 130). | Supports only 50 ops. |

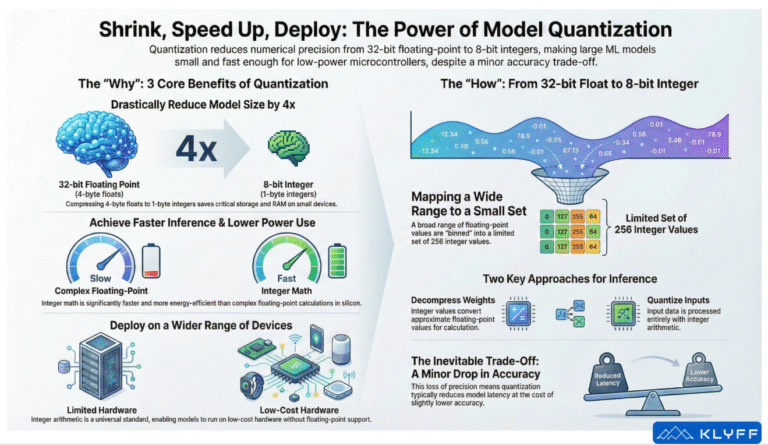

| Quantization Support | Does not natively support any specific quantization. | Quantization is natively supported, with optimization APIs present. | Quantization is natively supported, with optimization APIs present. |
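To make the quantization row concrete, here is a minimal pure-Python sketch of affine 8-bit quantization, the general idea of storing float weights as small integers plus a scale and zero point. The function names and the example weights are assumptions made for illustration; this is not the TFLite converter or its API, only the arithmetic behind the concept.

```python
def quantize_params(min_val, max_val, qmin=-128, qmax=127):
    """Derive a scale and zero point that map [min_val, max_val] onto int8."""
    # Widen the range to include 0.0 so that zero is represented exactly.
    min_val = min(min_val, 0.0)
    max_val = max(max_val, 0.0)
    scale = (max_val - min_val) / (qmax - qmin)
    if scale == 0.0:
        scale = 1.0  # degenerate case: all values are zero
    zero_point = int(round(qmin - min_val / scale))
    zero_point = max(qmin, min(qmax, zero_point))
    return scale, zero_point


def quantize(values, scale, zero_point, qmin=-128, qmax=127):
    """Map floats to clamped 8-bit integers."""
    return [max(qmin, min(qmax, int(round(v / scale)) + zero_point))
            for v in values]


def dequantize(qvalues, scale, zero_point):
    """Recover approximate floats from the 8-bit integers."""
    return [scale * (q - zero_point) for q in qvalues]


# Hypothetical weights, chosen only for illustration.
weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
scale, zero_point = quantize_params(min(weights), max(weights))
q_weights = quantize(weights, scale, zero_point)
restored = dequantize(q_weights, scale, zero_point)

for w, q, r in zip(weights, q_weights, restored):
    print(f"{w:+.4f} -> {q:4d} -> {r:+.4f}")
```

Each weight now occupies one byte instead of four, at the cost of a rounding error that stays within one quantization step.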

TensorFlow operations, also known as Ops, are nodes that perform computations on or with Tensor objects. After computation, they return zero or more tensors, which other Ops later in the graph can use. To create an Operation, you call its constructor in Python, passing the Tensor parameters it needs for its calculation (its inputs) along with any additional information needed to create the Op (its attributes). The Python constructor returns a handle to the Operation’s output (zero or more Tensor objects), and this output can be passed on to other Operations or to Session.run, the TensorFlow 1.x call that executes a graph.
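The relationship between inputs, attributes, and outputs described above can be sketched with a toy graph in plain Python. Everything here (the `Op` class and the `constant`, `add`, `scale`, and `run` helpers) is hypothetical and written only to illustrate the idea; it is not TensorFlow's API, and lists of floats stand in for tensors.

```python
class Op:
    """Toy graph node: stores its input ops and attributes, computes on demand."""

    def __init__(self, name, fn, inputs=(), **attrs):
        self.name = name
        self.fn = fn
        self.inputs = tuple(inputs)  # other Op nodes feeding this one
        self.attrs = attrs           # fixed configuration, not tensor data


def constant(value, name="const"):
    return Op(name, lambda: value)


def add(a, b, name="add"):
    return Op(name, lambda x, y: [xi + yi for xi, yi in zip(x, y)],
              inputs=(a, b))


def scale(a, factor, name="scale"):
    # `factor` is an attribute: it is fixed when the op is constructed.
    return Op(name, lambda x, factor: [xi * factor for xi in x],
              inputs=(a,), factor=factor)


def run(op, cache=None):
    """Evaluate an op after its inputs, loosely like a session-style run call."""
    cache = {} if cache is None else cache
    if id(op) not in cache:
        args = [run(i, cache) for i in op.inputs]
        cache[id(op)] = op.fn(*args, **op.attrs)
    return cache[id(op)]


a = constant([1.0, 2.0, 3.0], name="a")
b = constant([10.0, 20.0, 30.0], name="b")
total = add(a, b)                        # building the graph computes nothing yet
result = run(scale(total, factor=0.5))   # evaluation happens here
print(result)
```

Constructing the ops only wires up the graph; nothing is computed until `run` walks it. That separation between describing a model and executing it is what lets a deployment runtime ship with a fixed, much smaller set of op implementations.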

2. Software Infrastructure and Footprint

To save memory on resource-constrained devices, TFLite and TFLite Micro strip away all unnecessary components required for complex cloud training.

| Feature | TensorFlow (TF) | TensorFlow Lite (TFLite) | TensorFlow Lite Micro (TFLite Micro) |
|---|---|---|---|
| Base Footprint | Large base footprint. | Base footprint is an order of magnitude smaller than TensorFlow. | Base footprint is an order of magnitude smaller than TensorFlow. |
| Binary Size | Approximately 3 megabytes. | Binary size is on the order of a few kilobytes (extremely optimized software infrastructure). | Binary size is on the order of a few kilobytes (extremely optimized software infrastructure). |
| Operating System Need | Requires an OS (e.g., Linux, Windows) because it relies on features like dynamic memory allocation. | Typically runs on an OS (e.g., Android, iOS) that supports dynamic memory allocation. | Does not need an operating system. Designed to be lean and efficient on microcontrollers. |
| Distributed Compute | Supports distributed compute, such as training across multiple machines or accelerators. | Distributed compute capability is removed, as inference typically runs on a single device. | Distributed compute capability is removed. |
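Running without an operating system means doing without dynamic memory allocation. A common pattern on such targets is to reserve one fixed buffer up front and carve tensors out of it. The sketch below shows that idea in Python; the `TensorArena` class, its 16-byte alignment, and the tensor sizes are assumptions for illustration, not TFLite Micro's actual allocator.

```python
class TensorArena:
    """Fixed-size buffer handed out in aligned chunks; nothing is ever freed."""

    def __init__(self, size_bytes, alignment=16):
        self.buffer = bytearray(size_bytes)  # reserved once, up front
        self.alignment = alignment
        self.offset = 0

    def allocate(self, num_bytes):
        # Round the current offset up to the next aligned address.
        start = -(-self.offset // self.alignment) * self.alignment
        end = start + num_bytes
        if end > len(self.buffer):
            raise MemoryError(
                f"arena too small: need {end} bytes, have {len(self.buffer)}")
        self.offset = end
        return memoryview(self.buffer)[start:end]


# Hypothetical tensor sizes for a small model.
arena = TensorArena(size_bytes=1024)
input_tensor = arena.allocate(96)
hidden_tensor = arena.allocate(200)
output_tensor = arena.allocate(10)
print(f"used {arena.offset} of {len(arena.buffer)} bytes")
```

Because the network structure is fixed at deployment, the total memory requirement can be worked out ahead of time, and a buffer that is too small fails immediately at setup rather than at some unpredictable point during inference.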

3. Hardware Optimization

The optimization targets drastically change based on the available processing power, moving from powerful cloud processors to minimalist embedded chips.

| Feature | TensorFlow (TF) | TensorFlow Lite (TFLite) | TensorFlow Lite Micro (TFLite Micro) |
|---|---|---|---|
| Target Architecture | Optimized for powerful desktop, server, and cloud hardware. | Optimized for mobile devices. | Custom optimized for embedded systems. |
| Target Processors | x86 architectures, GPUs, and custom-built Google TPUs. | Arm Cortex-A processors (application-class processors) and x86 processors. | Arm Cortex-M class microcontrollers and Digital Signal Processors (DSPs). |
| Delegation | The ability to delegate to hardware accelerators exists. | The ability to delegate to hardware accelerators exists. | Not yet available; the ability to deploy onto custom micro neural processing units on embedded microcontrollers is expected soon. |
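Delegation, in general terms, means handing the ops an accelerator supports to that accelerator and running the rest on the CPU. The following sketch shows only that partitioning idea. The `plan_execution` function and the set of accelerator-supported ops are hypothetical, and the op names are used purely as labels.

```python
def plan_execution(model_ops, accelerator_ops):
    """Group consecutive ops by where they would run: accelerator or CPU."""
    plan = []
    for op in model_ops:
        target = "accelerator" if op in accelerator_ops else "cpu"
        # Merge neighbouring ops on the same target so each group is
        # handed off once instead of op by op.
        if plan and plan[-1][0] == target:
            plan[-1][1].append(op)
        else:
            plan.append((target, [op]))
    return plan


# Hypothetical model and accelerator capabilities.
model_ops = ["CONV_2D", "DEPTHWISE_CONV_2D", "RESHAPE",
             "FULLY_CONNECTED", "SOFTMAX"]
accelerator_ops = {"CONV_2D", "DEPTHWISE_CONV_2D", "FULLY_CONNECTED"}

plan = plan_execution(model_ops, accelerator_ops)
for target, ops in plan:
    print(f"{target}: {', '.join(ops)}")
```

Every switch between targets adds hand-off overhead, so a model whose ops are mostly supported by the accelerator tends to benefit the most from delegation.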

In summary, the transition from TensorFlow to the TensorFlow Lite ecosystem highlights the fundamental difference between building a model and deploying it. While TF allows researchers to run computationally intensive training on powerful hardware, TFLite and TFLite Micro are meticulously stripped-down runtimes designed to ensure that even the smallest microcontroller can execute a fixed model efficiently, proving that sometimes, less is truly more.