Artificial intelligence is undergoing a profound migration. For years, the AI narrative has been dominated by large language models (LLMs) running in massive data centers. Now a significant shift is underway: AI is moving out of the purely digital realm and into the physical world. This is the era of Physical AI, a convergence of digital intelligence and hardware that represents the next great frontier of technology.

As organizations run into the limits of cloud computing (latency, bandwidth costs, and privacy concerns), the “edge” has emerged as the critical battleground. This article explores why AI at the edge is vital, how Physical AI serves as a technological inflection point, and how it is fundamentally reshaping global industries.

The Shift to the Edge: Beyond the Cloud

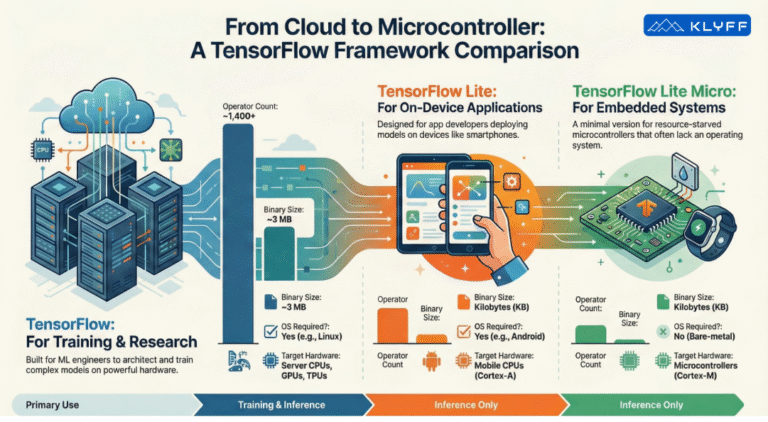

The “edge” refers to processing data locally on devices rather than sending it to a centralized cloud. While cloud computing powers vast generative models, it is becoming increasingly clear that the inflection point for practical AI lies at the edge.

Three persistent factors are driving this shift: the explosive growth of data, the limits of network speed (latency), and rising security and regulatory requirements. For real-time applications, whether in a self-driving car or a manufacturing robot, sending data on a round trip to a cloud server and waiting for the response is often too slow and too expensive.
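To make the latency argument concrete, the short calculation below converts a response delay into the distance a vehicle covers before a decision arrives. The 10 ms and 150 ms figures are hypothetical values chosen for illustration, not measurements of any particular system.

```python
def distance_during_latency(speed_kmh: float, latency_ms: float) -> float:
    """Return the metres travelled at `speed_kmh` while waiting `latency_ms`."""
    speed_m_per_s = speed_kmh * 1000.0 / 3600.0  # km/h -> m/s
    return speed_m_per_s * latency_ms / 1000.0   # ms -> s


# Hypothetical response times, for illustration only.
SCENARIOS = {
    "on-device inference": 10.0,
    "cloud round trip": 150.0,
}

if __name__ == "__main__":
    for name, latency in SCENARIOS.items():
        metres = distance_during_latency(100.0, latency)
        print(f"{name}: {latency:.0f} ms -> {metres:.2f} m at 100 km/h")
```

With these assumed numbers, a car at 100 km/h covers about 4.17 m during the cloud round trip, versus about 0.28 m when inference runs locally.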

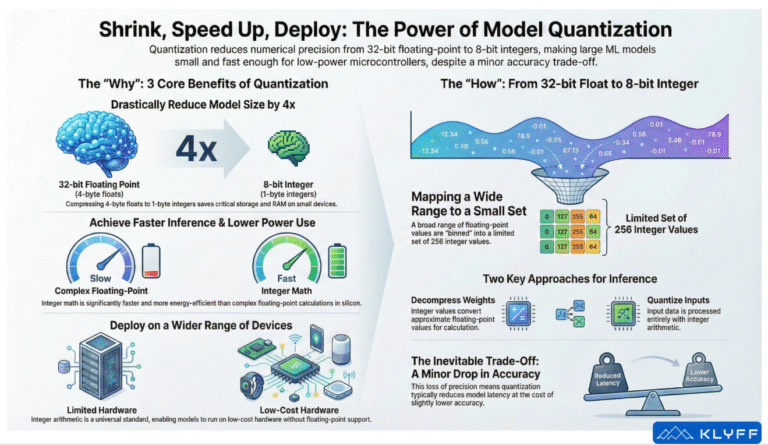

To address this, the industry is moving toward “computational factories” at the edge, built on specialized semiconductors and energy-efficient parallel GPU architectures that deliver high throughput within tight power budgets. Hardware innovations such as Neural Processing Units (NPUs) now allow devices to run sophisticated models locally, broadening access to AI and enabling deployment in resource-constrained environments.

Physical AI: The Inflection Point

Physical AI is defined as the integration of AI into the physical world, where autonomous systems perceive, understand, reason, and act. It is the inflection point where machines evolve from following rigid, pre-programmed rules to becoming adaptive, intelligent agents.
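The perceive, understand/reason, act cycle can be pictured as a closed control loop. The sketch below assumes a hypothetical distance sensor and uses a hand-written `reason` function purely as a stand-in for the learned model a Physical AI system would use in its place; every name here is illustrative.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Observation:
    distance_to_obstacle_m: float  # hypothetical range-sensor reading


@dataclass
class Action:
    speed_m_per_s: float


def reason(obs: Observation, cruise_speed: float = 1.0, stop_margin: float = 0.5) -> Action:
    """Toy policy standing in for a learned model: stop inside the margin,
    otherwise slow down as the obstacle gets closer, capped at cruise speed."""
    if obs.distance_to_obstacle_m <= stop_margin:
        return Action(0.0)
    return Action(min(cruise_speed, obs.distance_to_obstacle_m - stop_margin))


def run_loop(sense: Callable[[], Observation], act: Callable[[Action], None], steps: int) -> None:
    """Closed loop: perceive -> reason -> act, repeated every step."""
    for _ in range(steps):
        obs = sense()         # perceive
        action = reason(obs)  # understand and reason
        act(action)           # act on the world


def simulate(readings: List[float]) -> List[float]:
    """Feed a fixed list of sensor readings through the loop and record the commanded speeds."""
    it = iter(readings)
    commanded: List[Action] = []
    run_loop(lambda: Observation(next(it)), commanded.append, steps=len(readings))
    return [a.speed_m_per_s for a in commanded]


if __name__ == "__main__":
    print(simulate([3.0, 1.2, 0.4]))
```

The point of the structure is that only `reason` changes when a rule-based controller is replaced by an adaptive, learned one; the sensing and actuation loop around it stays the same.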

From Rule-Based to Embodied Intelligence

Historically, industrial robots were “rule-based,” capable of speed and precision but lacking flexibility; they failed when faced with unpredictable variability. The breakthrough lies in Embodied AI, a subcategory of Physical AI where a machine’s intelligence is shaped by its body and real-world experiences.

Recent advances in Vision-Language-Action (VLA) models, such as Google DeepMind’s Gemini Robotics, illustrate this leap. These models give robots “embodied reasoning,” enabling them to understand natural-language instructions, reason about 3D space, and manipulate objects with increasing dexterity. For instance, a robot can look at a coffee mug, infer an appropriate two-finger grasp, and plan a safe trajectory to pick it up, generalizing from a limited number of demonstrations to objects it has never seen before.

Generative AI plays a crucial role here, providing the “world understanding” necessary for robots to handle unstructured environments. By using “world foundation models” and synthetic data generated in physics-based simulations (like NVIDIA Omniverse), developers can train robots in virtual environments to master complex tasks before they ever touch physical hardware.
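A widely used technique for making simulated training data transfer to real hardware is domain randomization: each synthetic episode samples physical and visual parameters from broad ranges, so a model does not overfit to one idealized scene. The generic sketch below shows only the sampling step. It does not use NVIDIA Omniverse or any simulator API, and the parameter names and ranges are hypothetical; in a real pipeline, each sampled configuration would be passed to a physics simulator to render and label a training example.

```python
import random
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class SceneParams:
    object_mass_kg: float
    friction: float
    light_intensity: float
    camera_yaw_deg: float


# Hypothetical randomization ranges (low, high), for illustration only.
RANGES: Dict[str, Tuple[float, float]] = {
    "object_mass_kg": (0.1, 2.0),
    "friction": (0.2, 1.0),
    "light_intensity": (0.3, 1.5),
    "camera_yaw_deg": (-15.0, 15.0),
}


def sample_scene(rng: random.Random) -> SceneParams:
    """Draw each scene parameter uniformly from its range."""
    return SceneParams(**{name: rng.uniform(lo, hi) for name, (lo, hi) in RANGES.items()})


def generate_configs(n: int, seed: int = 0) -> List[SceneParams]:
    """Produce n randomized scene configurations; a fixed seed makes runs reproducible."""
    rng = random.Random(seed)
    return [sample_scene(rng) for _ in range(n)]


if __name__ == "__main__":
    for scene in generate_configs(3):
        print(scene)
```

Using a dedicated `random.Random` instance with an explicit seed keeps the generated dataset reproducible across training runs.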

Why It Is Important: Resilience and Autonomy

The rise of Physical AI is not merely a technical upgrade; it is a strategic imperative. It addresses critical global challenges, including labor shortages, supply chain fragility, and the need for sustainable productivity.

- Closing the Autonomy Gap: Physical AI enables “context-based” robotics capable of zero-shot generalization: executing tasks they have never seen before without explicit reprogramming.

- Safety in High-Stakes Environments: In sectors like fusion energy, where control systems must cope with extreme volatility, hybrid architectures combine data-driven AI with physics-based models. Explicit “interface contracts” between these components define the boundaries within which the AI may operate, handing control over to safeguards if the AI's uncertainty rises.
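One way to read the “interface contract” idea is as a runtime guard around the learned controller: the AI's command is accepted only while its self-reported uncertainty stays below a threshold, and it is clipped to agreed output bounds; otherwise a conventional safeguard takes over. The sketch below is a generic illustration of that pattern, not the design of any fusion control system; the contract fields, the toy policy, and the proportional safeguard are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, Tuple


@dataclass(frozen=True)
class InterfaceContract:
    min_output: float       # lowest command the AI may issue
    max_output: float       # highest command the AI may issue
    max_uncertainty: float  # above this, the AI is not trusted


def guarded_command(
    ai_policy: Callable[[float], Tuple[float, float]],
    safeguard: Callable[[float], float],
    contract: InterfaceContract,
    state: float,
) -> Tuple[float, str]:
    """Return (command, source). The AI policy returns (command, uncertainty)."""
    command, uncertainty = ai_policy(state)
    if uncertainty > contract.max_uncertainty:
        # Hand control to the safeguard when the AI is unsure.
        return safeguard(state), "safeguard"
    # Otherwise keep the AI's command within the contract's bounds.
    clipped = min(max(command, contract.min_output), contract.max_output)
    return clipped, "ai"


def toy_policy(state: float) -> Tuple[float, float]:
    """Hypothetical learned policy: confident only near its training range |state| < 1."""
    uncertainty = 0.05 if abs(state) < 1.0 else 0.5
    return -2.0 * state, uncertainty


def proportional_safeguard(state: float) -> float:
    """Hypothetical conservative fallback: a gentler proportional controller."""
    return -0.5 * state


if __name__ == "__main__":
    contract = InterfaceContract(min_output=-1.0, max_output=1.0, max_uncertainty=0.1)
    for s in (0.3, 0.8, 2.0):
        print(s, guarded_command(toy_policy, proportional_safeguard, contract, s))
```

Under these assumptions, a state of 0.3 yields the AI's own command (-0.6), 0.8 yields an AI command clipped to the -1.0 bound, and 2.0, outside the policy's confident range, is handled by the safeguard (-1.0). Here the safeguard's output is trusted as-is; a stricter design might bound it as well.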

- Economic Viability: By simplifying deployment through virtual training and intuitive interfaces, Physical AI reduces the engineering effort required to automate tasks, making robotics accessible to small and mid-sized enterprises (SMEs).

How Physical AI is Changing Industries

We are witnessing a paradigm shift where AI moves from being a digital assistant to a physical laborer.

1. Manufacturing and Logistics

The impact on manufacturing is visible in the transition from static assembly lines to adaptive ecosystems.

- Foxconn has deployed AI-powered robotic arms that use digital twins to master high-precision tasks such as screw tightening and cable insertion, which were previously considered too complex to automate. This approach reduced deployment time by 40% and defect rates by 25%.

- Amazon operates the world’s largest robotics fleet, using Physical AI to orchestrate end-to-end fulfillment. Systems like “Sparrow” use computer vision to handle millions of different inventory items, while autonomous mobile robots (AMRs) like “Proteus” navigate safely alongside human workers. This integration has led to 25% faster delivery speeds and the creation of new, higher-skilled roles.

2. Healthcare

In healthcare, Physical AI is revolutionizing patient care and surgery. Surgical robots are learning to perform intricate tasks like suturing through imitation learning, while AI-powered prosthetics and wearables offer real-time health monitoring and gait support.

3. Infrastructure and Smart Cities

Physical AI is enabling smarter urban planning and maintenance. Drones equipped with neuromorphic vision can detect pests in agricultural fields or inspect critical infrastructure like bridges and pipelines for defects. In smart cities, edge AI optimizes traffic flow and energy distribution, enhancing sustainability and livability.

The Path Forward: Trust and Workforce Transformation

As Physical AI systems become more autonomous, ensuring their safety and trustworthiness is paramount. Unlike purely digital software, a failure in Physical AI can cause physical harm. Frameworks like the NIST AI Risk Management Framework, whose core is organized into four functions (Govern, Map, Measure, and Manage), are essential for identifying and controlling these risks, emphasizing trustworthiness characteristics such as safety, reliability, and explainability.

Furthermore, this technological wave requires a workforce transformation. As robots take over repetitive physical tasks, human roles are shifting toward supervision, system optimization, and “robot training,” necessitating widespread upskilling initiatives.

Conclusion

AI at the edge represents the maturation of artificial intelligence from a passive data processor to an active participant in the physical world. By granting machines the ability to perceive, reason, and act, Physical AI is unlocking new levels of productivity and resilience. As industries from manufacturing to healthcare integrate these embodied intelligences, we are entering a new age of industrial operations defined by the seamless collaboration between human intent and machine capability.

Analogy:

Think of traditional cloud AI as a brilliant architect who works in a distant office, sending blueprints (data) back and forth via mail. It is slow and detached from the construction site. Physical AI at the edge is like putting that architect directly on the building site, giving them hands, and teaching them to lay bricks. They can see problems immediately, adapt to the weather instantly, and get the job done without waiting for instructions from headquarters.