Model Compression & Optimization

Faster models on edge devices within 4–6 weeks

What we do:

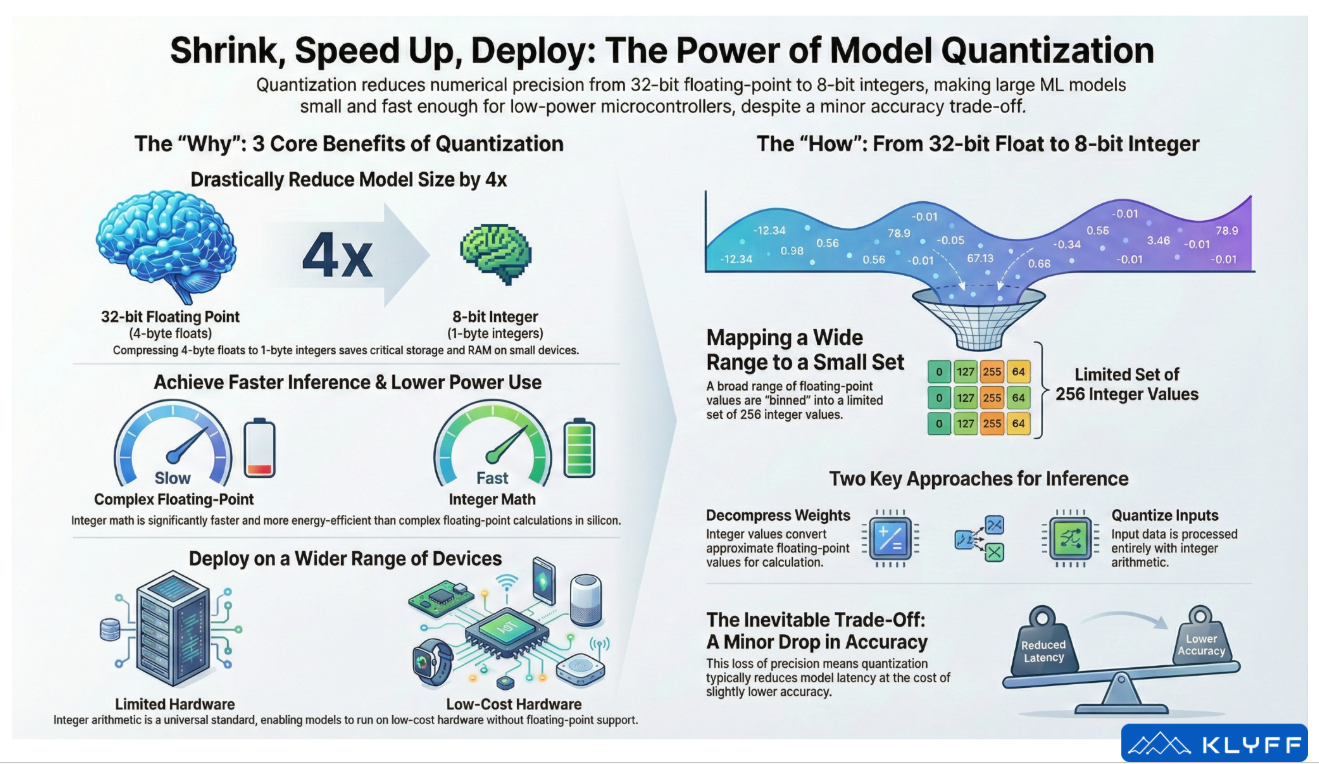

Quantization-aware training (QAT)

Quantization-aware training (QAT) integrates quantization directly into the training process, simulating low-precision arithmetic (e.g., 8-bit or 4-bit) so the model learns to be robust to quantization noise and maintains accuracy despite reduced bit-widths.

This contrasts with post-training quantization, which can cause sharp accuracy drops, especially at low bit-widths. QAT matters most when deploying to memory-constrained hardware, such as Jetson Nano, Google Coral, or microcontrollers, in applications that cannot afford significant accuracy loss.

The result is a production-ready model that is 2–8× smaller and 2–5× faster while preserving the accuracy needed for safety-critical or inspection-sensitive applications.
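The low-precision simulation at the heart of QAT can be illustrated with a minimal "fake quantization" sketch: values are rounded onto an integer grid and immediately mapped back to floats, so the rest of the network sees the quantization error during training. The function name `fake_quantize`, the per-tensor affine scheme, and the sample weights are assumptions for illustration, not a specific framework's implementation. Real frameworks also let gradients pass through the rounding step (commonly with a straight-through estimator), which this sketch leaves out.

```python
def fake_quantize(values, num_bits=8):
    """Quantize floats to a num_bits unsigned integer grid, then dequantize.

    Illustrative per-tensor affine scheme (an assumption, not a specific
    framework's implementation).
    """
    qmin, qmax = 0, 2 ** num_bits - 1
    # The representable range must include zero so that 0.0 maps back exactly.
    lo = min(min(values), 0.0)
    hi = max(max(values), 0.0)
    scale = (hi - lo) / (qmax - qmin) or 1.0  # fall back to 1.0 for all-zero input
    zero_point = round(-lo / scale)

    dequantized = []
    for v in values:
        q = round(v / scale) + zero_point
        q = max(qmin, min(qmax, q))  # clamp to the integer range
        dequantized.append((q - zero_point) * scale)
    return dequantized, scale


def max_abs_error(original, approx):
    """Largest absolute difference between two equally long sequences."""
    return max(abs(a - b) for a, b in zip(original, approx))


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]  # hypothetical layer weights
w8, scale8 = fake_quantize(weights, num_bits=8)
w4, scale4 = fake_quantize(weights, num_bits=4)
err8 = max_abs_error(weights, w8)
err4 = max_abs_error(weights, w4)
```

Comparing `err8` with `err4` shows how the rounding error grows as the bit-width shrinks, which is the noise the model learns to tolerate during QAT.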

Neural Architecture Search (NAS)

Neural architecture search automates the discovery of model architectures that fit real device constraints (memory, latency, power, and compute), rather than relying on manual trial and error or generic model selection.

Hardware-aware NAS explicitly encodes constraints, such as “≤256 KB RAM and ≤5 ms latency on Cortex-M4,” and discovers architectures that meet these bounds while maximizing accuracy.

For manufacturing edge deployments, this yields compact, efficient models that run in real-time on the exact hardware in the factory without over-provisioning.
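The constraint-driven search described above can be sketched as a small grid search that discards any candidate exceeding the device budget and keeps the best-scoring survivor. The budget reuses the example figures from the text (256 KB RAM, 5 ms latency); the search space, the cost models, and the accuracy proxy are all placeholders invented for illustration. A real hardware-aware search would measure memory and latency on the target device and train or estimate each candidate's accuracy.

```python
import itertools
import math

# Device budget taken from the example constraint in the text.
MAX_RAM_KB = 256
MAX_LATENCY_MS = 5.0


def estimate_ram_kb(width, depth):
    # Placeholder cost model (assumption); real searches measure on-device.
    return 0.5 * width * depth


def estimate_latency_ms(width, depth):
    # Placeholder cost model (assumption); real searches measure on-device.
    return 0.004 * width * depth


def proxy_accuracy(width, depth):
    # Placeholder score (assumption); stands in for training and validation.
    return 0.70 + 0.03 * math.log2(width) + 0.02 * math.log2(depth)


def search(widths, depths):
    """Return the best-scoring (width, depth) that fits the device budget."""
    best = None
    for width, depth in itertools.product(widths, depths):
        ram_kb = estimate_ram_kb(width, depth)
        latency_ms = estimate_latency_ms(width, depth)
        if ram_kb > MAX_RAM_KB or latency_ms > MAX_LATENCY_MS:
            continue  # violates the hardware constraints, never considered
        score = proxy_accuracy(width, depth)
        if best is None or score > best["score"]:
            best = {
                "width": width,
                "depth": depth,
                "ram_kb": ram_kb,
                "latency_ms": latency_ms,
                "score": score,
            }
    return best


best = search(widths=[16, 32, 64, 128], depths=[2, 4, 8, 12])
```

Treating the budget as a hard filter, rather than a penalty term, guarantees that whatever the search returns will fit the device.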

Hardware-Specific Optimization

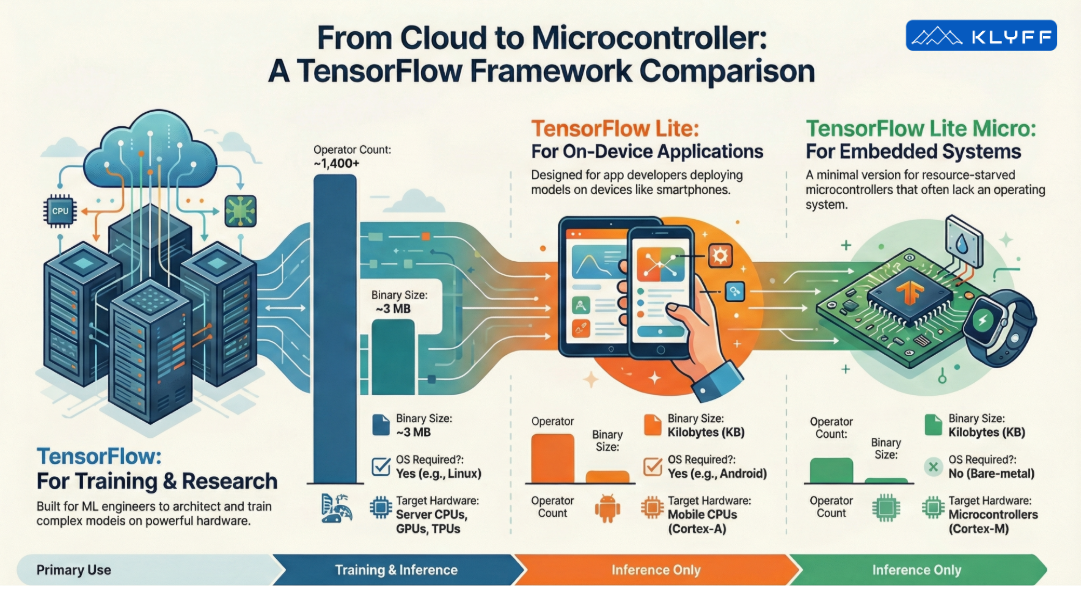

This service uses vendor-specific toolchains and kernel libraries (TensorRT, the Edge TPU Compiler for Coral, OpenVINO, CMSIS-NN, and the Vela compiler for Arm Ethos-U) to map models onto each platform’s fastest operators, tensor layouts, and hardware accelerators.

Layer fusion, precision auto-calibration (FP16/INT8), and kernel auto-tuning are applied per-device, yielding 2–10× latency improvements and 30–70% power reduction compared to generic deployments.

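The model's architecture and task remain the same; only the execution path and performance change. Layer fusion preserves outputs up to floating-point rounding, while FP16 or INT8 execution can shift them slightly and should be validated against the original model.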
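Layer fusion, one of the optimizations named above, can be illustrated by folding a batch-normalization step into the linear layer that precedes it. The sketch below uses a single scalar channel and made-up parameter values; vendor toolchains perform fusions like this internally and on full tensors, so this shows the arithmetic idea rather than any toolchain's actual procedure.

```python
import math


def linear_then_batchnorm(x, w, b, gamma, beta, mean, var, eps=1e-5):
    """Unfused path: a linear op followed by inference-time batch norm."""
    y = w * x + b
    return gamma * (y - mean) / math.sqrt(var + eps) + beta


def fold_batchnorm(w, b, gamma, beta, mean, var, eps=1e-5):
    """Fold the batch-norm statistics into the linear weight and bias."""
    factor = gamma / math.sqrt(var + eps)
    return w * factor, (b - mean) * factor + beta


def fused_linear(x, w_fused, b_fused):
    """Fused path: one multiply-add replaces the two original ops."""
    return w_fused * x + b_fused


# Hypothetical single-channel parameters, for illustration only.
params = dict(w=0.8, b=0.1, gamma=1.5, beta=-0.2, mean=0.3, var=0.04)
w_fused, b_fused = fold_batchnorm(**params)

inputs = [-2.0, -0.5, 0.0, 1.0, 3.5]
reference = [linear_then_batchnorm(x, **params) for x in inputs]
fused = [fused_linear(x, w_fused, b_fused) for x in inputs]
max_diff = max(abs(r - f) for r, f in zip(reference, fused))
```

Here `max_diff` stays at the level of floating-point rounding: the fused path does less work per input while computing the same function.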

Accuracy vs. Latency Tradeoff Analysis

This structured process characterizes how model accuracy changes when shrinking networks, reducing precision, or pruning channels, then plots these changes against latency and throughput measured on real hardware across batch sizes.

Teams use this data to choose evidence-based operating points (e.g., “95% accuracy at 18 ms” versus “97% accuracy at 32 ms”) that align with product SLAs and manufacturing requirements.

This prevents over-optimization (wasting compute for 0.1% additional accuracy) and under-optimization (missing critical defect detection).
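Choosing an operating point from such measurements can be sketched as two steps: keep only the candidates that no other candidate beats on both latency and accuracy (the Pareto front), then pick the most accurate one within the latency budget. The two operating points quoted in the text appear below as `variant-a` and `variant-b`; the variant names, the other two candidates, and the budgets are hypothetical.

```python
from typing import NamedTuple


class Candidate(NamedTuple):
    name: str
    latency_ms: float
    accuracy: float


def pareto_front(candidates):
    """Drop candidates that another candidate matches or beats on both axes."""
    front = []
    for c in candidates:
        dominated = any(
            o.latency_ms <= c.latency_ms
            and o.accuracy >= c.accuracy
            and (o.latency_ms < c.latency_ms or o.accuracy > c.accuracy)
            for o in candidates
        )
        if not dominated:
            front.append(c)
    return sorted(front, key=lambda c: c.latency_ms)


def pick_operating_point(front, max_latency_ms):
    """Most accurate candidate within the latency budget, or None."""
    feasible = [c for c in front if c.latency_ms <= max_latency_ms]
    return max(feasible, key=lambda c: c.accuracy) if feasible else None


candidates = [
    Candidate("variant-a", 18.0, 0.95),   # operating point quoted in the text
    Candidate("variant-b", 32.0, 0.97),   # operating point quoted in the text
    Candidate("variant-c", 35.0, 0.94),   # hypothetical: slower and less accurate
    Candidate("variant-d", 60.0, 0.972),  # hypothetical: tiny gain, large cost
]

front = pareto_front(candidates)
choice_20ms = pick_operating_point(front, max_latency_ms=20.0)
choice_40ms = pick_operating_point(front, max_latency_ms=40.0)
```

With these numbers, a 20 ms budget selects `variant-a` and a 40 ms budget selects `variant-b`, while `variant-c` never appears on the front because `variant-b` is both faster and more accurate.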

Performance Benchmarking

Benchmarking runs standardized test suites on target devices to measure end-to-end latency, throughput, memory footprint, and thermals under realistic workloads and batch sizes.

These measurements expose bottlenecks in preprocessing, model execution, postprocessing, and I/O, enabling targeted optimization and validating that changes improve user-visible performance, not just synthetic metrics.

For manufacturing, this includes testing on actual production images/data to ensure real-world defect detection accuracy and speed.
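A per-stage timing harness of the kind described above might look like the following minimal sketch. It times each pipeline stage separately, discards warm-up iterations, and reports percentiles rather than a single average so that tail latency stays visible. The stage functions are trivial stand-ins, and the names `benchmark` and `percentile` are hypothetical; on a real device the stages would wrap the actual preprocessing, inference runtime, and postprocessing, and the harness would also record memory and thermals.

```python
import math
import time


def percentile(sorted_samples, p):
    """Nearest-rank percentile of an already sorted list."""
    rank = max(1, math.ceil(p / 100 * len(sorted_samples)))
    return sorted_samples[rank - 1]


def benchmark(stages, make_input, warmup=10, runs=100):
    """Time each (name, callable) stage; returns per-stage latency stats in ms.

    "end_to_end" is the sum of the stage times for one iteration; the time
    spent creating the input is not included.
    """
    timings = {name: [] for name, _ in stages}
    timings["end_to_end"] = []
    for i in range(warmup + runs):
        data = make_input()
        total_ms = 0.0
        for name, fn in stages:
            start = time.perf_counter()
            data = fn(data)
            elapsed_ms = (time.perf_counter() - start) * 1000.0
            total_ms += elapsed_ms
            if i >= warmup:  # warm-up iterations are run but not recorded
                timings[name].append(elapsed_ms)
        if i >= warmup:
            timings["end_to_end"].append(total_ms)

    report = {}
    for name, samples in timings.items():
        samples.sort()
        report[name] = {
            "p50_ms": percentile(samples, 50),
            "p95_ms": percentile(samples, 95),
            "max_ms": samples[-1],
        }
    return report


# Stand-in stages (assumptions); replace with the real pipeline on-device.
stages = [
    ("preprocess", lambda pixels: [p / 255.0 for p in pixels]),
    ("inference", lambda xs: [x * 0.5 + 0.1 for x in xs]),
    ("postprocess", lambda ys: max(ys)),
]
report = benchmark(stages, make_input=lambda: list(range(1000)), warmup=5, runs=50)
```

Comparing the per-stage entries in `report` against `end_to_end` shows whether the model itself or the surrounding pipeline dominates latency.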

Expected outcomes:

50-70%

Model size reduction

<50ms

Inference latency on target hardware

<7%

Deployment-ready model artifacts

100%

Deployment-ready model artifacts

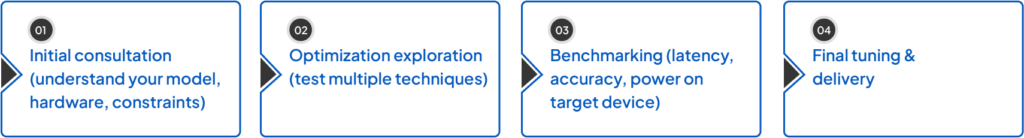

Our engagement process:

Selected Customer Success Stories

Adaptive Predictive Maintenance

Solder Joint Inspection