In our previous posts, we went through the various steps in building a successful Edge AI roadmap from framing the problem to building a dataset to the considerations of design and development. In this post, we will cover the process of evaluating, deploying, and supporting the Edge AI applications.

Evaluation

Is the key to the success of the system. Participation of the stakeholders and end users is essential throughout the build-up. Evaluation is conducted at every stage when building an Edge AI system. This helps in understanding how much benefit the edge AI system would bring. Evaluating potential algorithms. Iterative development to evaluate whether we are building the right system right. Changes before and after optimization. When do we have to implement lossy optimization techniques? On experimental and production hardware systems, at the time of deployment and post-deployment evaluating the performance of the system and making changes as needed

Evaluation includes individual components like windowing, downsampling, DSP, ML, Postprocessing, and rule-based algorithms. The collaboration and integration of these individual systems when working together also need to be evaluated. The ML algorithm and the rule-based algorithm may work well when tested in isolation. When they are combined they might not give the expected results.

Testing would include simulated environments for testing the product with near-real and synthetic data. Real-world testing happens when the product goes through QA testing and when it is in the hands of real users as Usability testing. Once we are beyond this phase then the system needs to be tested and monitored in the real world by observing and monitoring the production deployment.

Metrics

There are quite a few metrics that are applicable to the Edge AI systems

- Algorithmic

- Classifier Models

- Loss – way to measure the correctness of a model, the higher the loss, the more inaccurate the model is

- Accuracy – to understand the performance of a model. It will depend on the dataset as well. If a model is 90% accurate on structured data and only 10% on unstructured then we might have to rework the model

- Confusion matrix – how the model is getting confused within different classes. If it was supposed to classify as yellow but it did it as blue or vice versa

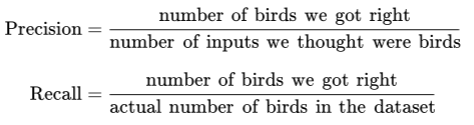

- Precision and recall – Precision is how frequently the model misses one class and identifies as another. Recall is how often the model misses the class altogether amongst the total dataset. Precision and recall, are both numbers between 0 & 1. The importance of Precision and Recall depends on the use case. For a smart speaker, the precision is important. It will be irritating to keep waking up on any random keyword when the wake sound is expected as “Alexa”. On the other hand, recall is important in the medical system. We would not like to miss any health issues if they exist in the dataset.

- Precision and Recall are related to the confidence score. A lower confidence score would mean lower precision but higher recall. We might be misclassifying some data inputs as something else but we would not be missing the data. In higher confidence, the precision would be high but the recall would be low

- Recall is also identified with True Positive Rate (TPR) and True Negative Rate (TNR).

- Regression models

- Error Metrics

- The Scikit learn metrics project contains a lot of other useful metrics based on the model

- Compute and hardware performance – This governs how quickly the algorithm is running and what resources it is consuming in the process. This allows us to decide how complex the algorithm can be and how should we provision infrastructure for the same.

- Memory Usage – RAM and ROM. ROM would store the actual algorithm and parameters for the ML model. Deep learning models are complex and big and would need to be finetuned and optimized to fit the ROM constraints. Similarly, once we run the algorithm we will get a high watermark for the RAM requirements

- FLOPs – Floating point operations per second. Given the FLOPs of a model and the FLOPs of the processor, we might be able to estimate the latency of the model on the processor

- Latency – Amount of time taken to run all parts of the algorithm end to end. For example, the time taken to capture the window, downsample it, run it through the DSP algorithm, feed the result to a deep learning model, execute the model, and process the output.

- Duty cycle – Edge devices need to preserve battery. They wake up for some time process data and go back to sleep again. This wake/sleep pattern is known as the duty cycle. For example, a processor might wake up after 200 milliseconds, read data for 10 milliseconds, process it for 50 milliseconds, and go to sleep again for 200 milliseconds. Hence it wakes for 60 and sleeps for 200 milliseconds.

- Battery capacity – measured in mAh (milliamp hours) which means the number of hours the battery can sustain a 1 mA current. So for a 100 mA device, this battery would last for 20 hours.

- Thermal rating – how much heat the device would generate. Most SOCs have built-in thermal sensors and they can throttle their performance on the basis of that. MCUs don’t have this and have to be coupled with a sensor.

Deploying

Can be divided into a series of tasks depending on the stage of deployment

Pre-Deployment

- Define concrete objectives in consultation with the stakeholders

- Identify key metrics

- Methods of Estimating Performance

- Possible risks

- Define a recovery plan

- Deployment design – defining the software, hardware, and cloud deployments strategy

- Alignment with values – any ethical considerations

- Communication plan for upstream and downstream systems (“upstream” refers to the source or origin of data, code, or processes, while “downstream” refers to the systems or processes that consume or are affected by that data, code, or processes)

- Go/nogo decisions

Mid-Deployment

- Two-way communication – pass on information to anyone who might be affected and solicit feedback

- Staggered rollout – in stages rather than together

- Monitor metrics – measure and be ready to rollback

Post-Deployment

- Communicate – Share status and results frequently

- Monitoring – keep an eye out for risks and deviations that might occur

- Deployment report – original plans, what changed, how and where the deployment is done

Support

Deployment marks the beginning of the product’s support phase. It involves keeping track of the performance of the system over time. Drift is a natural phenomenon and should be dealt with. When drift happens our dataset is no longer the real representation and a new data set needs to be collected for the current conditions. To identify the drift, the calculation of summary statistics is handy. These are measurements like mean, median, standard deviation, kurtosis, or a skew of readings for a particular sensor. Summary statistics give an indication of whether the drift is happening or not. How much drift can be identified with algorithms defined in Alibi Detect.

Getting feedback about the working of the Edge solution might not be easy if there are bandwidth and privacy concerns. In this case, a sample of anonymized data might be sent over to understand the workings of the system in production. This call-home data would not have any privacy information. The sample data set would help us understand the real-world scenario, it is valuable in debugging algorithms that are deployed and it can also add to the dataset for fine-tuning our model further

Other application metrics are important for support. These include,

- System logs – metrics about the system like timings start and end, battery consumption, heat production, etc

- User Activity – interaction of users with the system

- Device actions – output produced on the device as a result of the algorithms running there

Final outcomes – most Edge AI systems have goals that go beyond the devices. Like a reduction in errors on the production line an increase in the accuracy of a security prediction or an improvement in the quality of the product.

Termination Criteria

Once the system is in production and is monitored continuously, there might be situations where pulling the plug is necessary. These include situations like

- The drift amount has breached the threshold thus rendering the model useless with faulty results

- Deviation in the predicted impact on associated systems

- The ROI on the business goal is not achieved

- Algorithmic limitations exposed that are detrimental to fairness

- Security vulnerabilities uncovered

- Problem understanding has evolved thus leaving the current solution useless

- Edge AI techniques have improved leaving the current system obsolete and more expensive to run

- The problem domain has changed

- Evolving cultural norms with some things like cameras now accepted in homes

- Changing legal standards at the place of implementation

Conclusion

Thus, as we saw the work of an engineering team does not end when the system is deployed. There is an enormous amount of support, monitoring, observing, and maintenance that goes hand in hand for the solution to keep working. Until one fine day when the system will be terminated because of any of the reasons that we saw.